Early evidence suggests the Online Safety Act is failing its primary goal of child protection. VPN usage has surged 1,800% as users bypass restrictions with ease, while vulnerable users lose access to legitimate support communities. Technical failures include facial recognition systems fooled by video game screenshots.

Meanwhile, the law pushes harmful content to unregulated platforms rather than eliminating it. Only 24% of Britons believe the Act effectively protects children, which raises the question of whether its compliance costs, estimated in the billions, are justified by its actual safety benefits.

Free VPN Apps Easily Bypass Age Verification

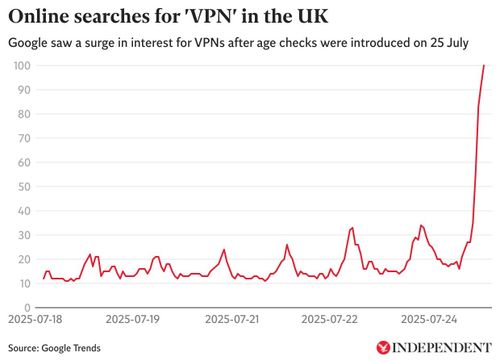

The most glaring evidence of the Online Safety Act’s ineffectiveness is how easily its restrictions can be bypassed with a VPN. VPN usage surged 1,800% following enforcement, which shows that motivated users, including the teenagers the law aims to protect, can readily circumvent age verification systems using widely available and often free VPN services.

Following the Act’s implementation, five of the top ten free apps on Apple’s UK App Store were VPN services, indicating that bypassing government internet restrictions has become mainstream behavior among British internet users. If most of the people adopting circumvention tools are law-abiding citizens seeking to protect their privacy rather than predators seeking to harm children, the Act’s burden falls mainly on people it was never meant to target, and its fundamental premise is undermined.

Technical Failures Expose Fundamental Flaws

Facial recognition age verification systems have proven embarrassingly vulnerable, with users successfully fooling supposedly sophisticated biometric analysis using screenshots from video games like Death Stranding. These technical failures highlight the fundamental contradiction in age verification systems: they must be sophisticated enough to prevent bypass attempts while remaining simple enough for legitimate users to complete.

The gaming community’s discovery that fictional video game characters can pass as human adults in verification systems exposes the broader inadequacy of biometric age estimation technology. If systems cannot distinguish between real people and computer-generated images, their reliability for protecting children is fundamentally compromised.


Displacement Rather Than Elimination

Rather than eliminating risks, the legislation has created a displacement effect where harmful content and predatory behavior simply migrate to platforms and services that operate outside UK jurisdiction or implement minimal compliance measures. This whack-a-mole approach pushes dangerous activities into darker corners of the internet where they are less visible to law enforcement and child protection services.

The restriction of legitimate educational and support content may actually increase harm to vulnerable young people who lose access to peer support networks and professional guidance that could provide crucial intervention during crisis periods. When mental health communities and addiction recovery resources require government ID verification, the most vulnerable users are effectively excluded from help.

Public Confidence in the Online Safety Act Crumbles

Polling data shows declining public trust in the Online Safety Act’s effectiveness: only 24% of Britons believe the legislation effectively protects children, down from 34% before implementation. This erosion of public confidence reflects growing awareness of the Act’s practical limitations and unintended consequences, including privacy violations and restrictions on legitimate content.

The gap between promised and delivered results has become increasingly apparent as users experience the Act’s impact firsthand. When parents discover that their teenagers can easily bypass restrictions with free VPNs while legitimate educational content becomes harder to reach, public support for the government’s approach inevitably declines.

Economic Costs vs. Safety Benefits

The enormous compliance costs imposed on digital platforms (estimated in the billions globally) must be weighed against the Act’s minimal demonstrable impact on child safety. When small community forums close entirely rather than implement costly compliance systems, the Act destroys valuable digital resources while providing no measurable protection benefits.

Innovation and competition suffer as startups and smaller platforms cannot afford compliance costs that established tech giants can absorb as routine business expenses. This market concentration effect ultimately reduces choice and innovation in digital services while failing to achieve the Act’s stated safety objectives.

Comparative International Evidence

Countries with different approaches to online child protection provide useful comparison points for evaluating the UK’s strategy. Nations focusing on education, digital literacy, and targeted law enforcement rather than mass surveillance and content restriction often achieve better safety outcomes without sacrificing privacy rights or internet freedom.

The international evidence suggests that effective child protection requires collaboration between schools, parents, law enforcement, and technology companies rather than government mandates for age verification and content censorship. Educational approaches that teach children to recognise and avoid online dangers may be more effective than technological solutions that can be easily bypassed.

Unintended Harm to Vulnerable Users

The Act’s age verification requirements have created new barriers for vulnerable users seeking help, including LGBTQ+ youth accessing support communities, addiction recovery participants, and sexual assault survivors seeking peer support. These groups often specifically require anonymity and privacy protections that age verification systems systematically undermine.

Mental health professionals have expressed concern that age verification barriers may prevent young people from accessing crisis intervention resources during mental health emergencies. When accessing help requires surrendering government identification to third-party companies, vulnerable users may choose to remain isolated rather than risk exposure and stigmatisation.

The Road Forward

Alternative approaches to child protection that preserve privacy rights and internet freedom deserve serious consideration as evidence mounts that the current strategy is ineffective. Focus should shift toward education, parental tools, improved law enforcement resources, and platform design changes that protect children without creating mass surveillance infrastructure.

The lesson from the UK experiment may ultimately be that technological solutions cannot substitute for human approaches to child protection that involve families, schools, and communities working together. Rather than continuing to expand failed surveillance and censorship measures, policymakers should acknowledge the Act’s limitations and pursue evidence-based alternatives that actually protect children while preserving digital rights for all users.
